Week 8: What Makes a Room a Room?: Towards an Effortless Scanning Experience through Innovative Gamification

Abstract

Long story short, we now have a working multi-player game with meaningful user interactions. In other words, we did everything this week.

We could probably have managed our time better, but what’s the use of dwelling on the past? It’s done! And hey, it looks like we are on track for our Week 8 milestone. It’s as if we were never behind:

“Week 8: working demo for game, polish application using high-quality model.”

Key Contributions

- Fixed Protobuf memory leak which prevented the sending of very large meshes.

- Implemented automatic alignment of Hololens client coordinate systems. Meshes that are sent to the server are fully aligned using a modification of the Hololens anchor points.

- Designed and implemented algorithm for automatic detection of areas in need of scanning.

- Implemented generation of targets at areas which need scanning

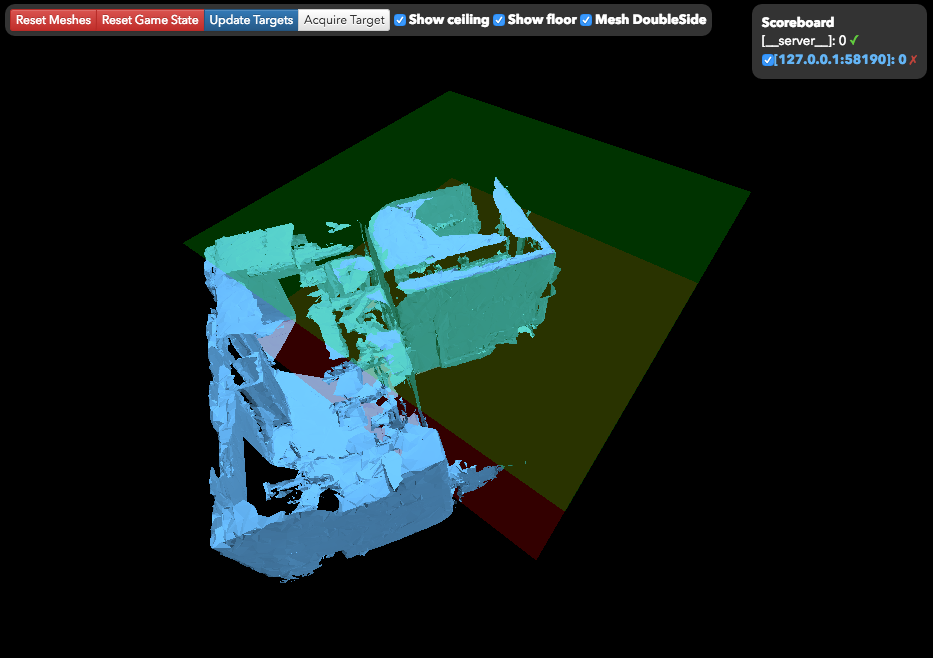

- Implemented a much improved visualization tool that shows real-time updates of the game state, scores, target positions, Hololens device positions, and individual meshes color-coded by source.

- Made the game so pretty with animations, sounds, and displays. And I mean so pretty. I don’t have any pictures to show you, but just take my word for it.

Breakdown

- Orb placement in clients

- Spatial Sound

- Robust networking across devices to handle different types of messages and prevent overloading server.

- Processing meshes in a way that allows delay-free sending of new targets when required.

- Game state synchronization with server

- Anchor-based alignment of clients

- Anchor-based sent mesh alignment

- Procedural music generation

- More exciting target made from particle effects

- Found target particle animation

- Found target sound confirmation (different for when found by other users)

- In-game HUD, which is placed 2 meters in front of each player whenever the score changes. It is fixed to that location and persists for three seconds.

- Mesh hole-finding: compares the number of faces at each point in a 2D top-down projection of the scene.

- Candidate target generation: regions with few or no faces are the ones that receive targets.

- Yielding processing of mesh in a coroutine to allow for super smooth framerate.

- Enabling the cursor only when looking at the target.

- Cursor turns green when close enough to select the target. Red when too far away.

- Disabled ray-casting to anything other than the object. This saves some computation.

- Sending Hololens positions to the server to track them in the game state

- Performing new target generation only when the mesh is sent and only sending them when they are requested by clients.

- Dashboard (rendering current targets, showing color-coded meshes, control passing).

- Bindings for Meshlab from Bash script.

- A bunch of other things, but you know how when you’ve done a lot of work, you forget about a bunch of the earlier stuff you worked on? I’d probably have to look through the code again to remember the rest. But this list is a pretty good starting point, you know?

Demo Plan

Before we get into the nitty-gritty, here’s a play-by-play of the demo next week:

- People put on Hololens.

- They’ll see a menu that explains a couple things about the game, and tells them to find and tap the orb to start. There will be a single orb placed in the center of the game area that everyone will share.

- When the orb is clicked, the HUD will indicate that we are waiting for the other players.

- Once the other players have clicked the orb, the game will start.

- People will go around finding and clicking orbs.

- Once a certain number is found, the server will end the game, and each client will begin to display a sequence of messages revealing the true intentions of our demo.

- The app will also show a glimpse of the mesh around the user.

- We show them a replay of what they went through on our server visualization tool, and they are wowed by the scanning and tracking or whatever.

- We restart the app, reset the game state on the server, restart the session manager, and are ready for our next demo.

Technical Details

Gameplay

Click on an orb, and it will explode and make a cool sound. Everyone sees the same orbs. Find the orbs before the others do. A lot of work went into getting this to be synchronized across devices, rendered efficiently, and looking pretty. It’s really not interesting to read about, though, so we’ll spare you the trouble by not writing about it.

Procedural Music Generation

From a set of pitched sound clips of a harp playing different notes, we wrote a script which randomly walks up or down the scale in increments of at most 2. The script also randomizes the pace at which the notes are played, sometimes playing groups of two or three notes in a row. This keeps things interesting, and keeps the soundtrack from getting boring the way a looped song might.
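A minimal sketch of that random walk, for illustration only. The clip names in `SCALE`, the gap timings, and the function name `generate_phrase` are all assumptions and are not taken from our actual script, which plays audio clips on the device rather than returning a list.

```python
import random

# Hypothetical names for the pitched harp clips, ordered low to high.
SCALE = ["C4", "D4", "E4", "F4", "G4", "A4", "B4", "C5"]

def generate_phrase(length, rng, max_step=2):
    """Random-walk over the scale; returns (clip_name, gap_seconds) pairs."""
    idx = len(SCALE) // 2  # start in the middle of the scale
    events = []
    while len(events) < length:
        # Play a run of one to three notes in quick succession.
        run = min(rng.choice([1, 2, 3]), length - len(events))
        for i in range(run):
            # Move at most max_step scale degrees, clamped to the scale.
            step = rng.randint(-max_step, max_step)
            idx = max(0, min(len(SCALE) - 1, idx + step))
            # Short gap inside a run, longer randomized pause after it
            # (the timing values here are made up).
            gap = 0.2 if i < run - 1 else rng.uniform(0.6, 1.5)
            events.append((SCALE[idx], gap))
    return events
```

Passing in a seeded `random.Random` makes a phrase reproducible, which is handy when tuning the pacing by ear.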

Candidate Target Generation

The problem of hole-finding is a classic task in the field of Holoscanning. We present Amazing Hole Finding Heuristic (AHFH) – a novel hole-finding algorithm which successfully generates candidate targets in locations proximal to holes in the scanned mesh provided by Hololens devices.

Computing a Global Map

In order to generate candidate region proposals, we must first have a sense of what area the entire mesh encompasses. A trivial solution would be to compute a convex hull of the current mesh. However, using a convex hull would allow targets to be generated outside the navigable vicinity of the players.

We propose a novel approach which uses advanced graphics and image processing techniques to ascertain the concave hull of the mesh. Our method is as follows:

- Render the mesh from a bird’s-eye viewpoint.

- In order to efficiently render the mesh we use the advanced rendering pipeline Python Imaging Library for end-to-end rendering of the meshes.

- For maximal efficiency, our input layer for the rendering architecture takes in a unified data encoding: triangles.

- Perform a morphological dilation of the resulting mask, then immediately a morphological erosion (together, a morphological closing), both with a kernel size of 0.04 times the smaller dimension of the mask. This fills small holes in the mask.

- Perform an additional morphological erosion using a tunable hyperparameter in order to ensure that the mapped area is contained within the user’s navigable arena.

- Before hole filling

- After hole filling
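The mask-processing steps above can be sketched in plain Python as follows. This is a sketch under assumptions, not our pipeline: it starts from an already rendered top-down mask stored as nested lists of booleans (the rendering itself is left out), it uses a square window, it reads "kernel size" as a window width from which a radius is derived, and the names `dilate`, `erode`, `global_map`, and `shrink` are made up for the example.

```python
def _window(mask, y, x, r):
    """Yield the in-bounds cells of the (2r+1) x (2r+1) window around (y, x)."""
    h, w = len(mask), len(mask[0])
    for yy in range(max(0, y - r), min(h, y + r + 1)):
        for xx in range(max(0, x - r), min(w, x + r + 1)):
            yield mask[yy][xx]

def dilate(mask, r):
    """A cell is set if any cell in its window is set."""
    return [[any(_window(mask, y, x, r)) for x in range(len(mask[0]))]
            for y in range(len(mask))]

def erode(mask, r):
    """A cell stays set only if every in-bounds cell in its window is set."""
    return [[all(_window(mask, y, x, r)) for x in range(len(mask[0]))]
            for y in range(len(mask))]

def global_map(mask, ratio=0.04, shrink=1):
    """Close small holes, then shrink the map by `shrink` cells."""
    kernel = int(ratio * min(len(mask), len(mask[0])))
    r = max(1, kernel // 2)            # assumed kernel-size-to-radius mapping
    closed = erode(dilate(mask, r), r)  # dilation then erosion = closing
    return erode(closed, shrink)        # extra erosion; `shrink` is the tunable knob
```

On real mask sizes this brute-force version would be slow; it is only meant to show the order of operations.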

Computing Candidate Areas

We perform the same rendering steps using only the faces near the floor plane, and again using only the faces near the ceiling plane, but without the dilation/erosion hole-filling step. By taking the complement of each of these floor and ceiling maps and intersecting it with the global map, we obtain the areas which have holes.

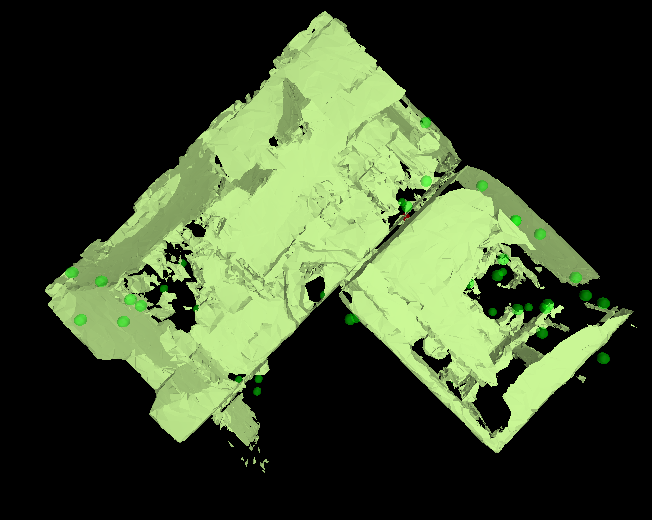

- Computed floor map

- Computed candidate target regions near floor

- Computed ceiling map

- Computed candidate target regions near ceiling
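As a rough sketch, the complement-and-intersect step is a cell-by-cell boolean operation on two masks of equal size. The function name `candidate_regions` and the nested-list mask representation are assumptions for this example.

```python
def candidate_regions(global_mask, layer_mask):
    """Cells inside the global map that the floor (or ceiling) map does not cover."""
    return [[g and not covered for g, covered in zip(g_row, l_row)]
            for g_row, l_row in zip(global_mask, layer_mask)]
```

It would be called once with the floor map and once with the ceiling map, giving the two sets of candidate regions captioned above.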

Generating Targets

We generate targets by uniformly sampling the candidate areas.

- Example targets generated and rendered in the dashboard.
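One way to sample a candidate mask uniformly is to list its set cells, draw from that list without replacement, and jitter each pick inside its cell. This is a hedged sketch: `sample_targets` is a hypothetical name, the coordinates are in mask pixels, and the conversion back to world coordinates is left out.

```python
import random

def sample_targets(candidates, count, rng):
    """Pick up to `count` distinct candidate cells uniformly at random."""
    cells = [(x, y)
             for y, row in enumerate(candidates)
             for x, is_candidate in enumerate(row) if is_candidate]
    picks = rng.sample(cells, min(count, len(cells)))
    # Jitter inside each cell so targets do not sit on a visible grid.
    return [(x + rng.random(), y + rng.random()) for x, y in picks]
```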

Pushing Targets

Since targets may be generated in close proximity, we ensure that the user explores the world outside their comfort zone by pushing targets reasonably further away™ from the last target acquired.
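The post does not spell out how the pushing works, so the following is only one plausible reading: keep the candidates that are at least some minimum distance from the last acquired target, and fall back to the single farthest one if none qualify. The name `push_away` and the `min_dist` threshold are assumptions.

```python
import math

def push_away(candidates, last_target, min_dist):
    """Prefer candidate positions at least `min_dist` from the last acquired target."""
    far = [c for c in candidates if math.dist(c, last_target) >= min_dist]
    if far:
        return far
    if not candidates:
        return []
    # Nothing is far enough: fall back to the single farthest candidate.
    return [max(candidates, key=lambda c: math.dist(c, last_target))]
```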

Dashboard

We present a web-based graphical user interface (GUI) for tracking the state of the game and of the scanning in real time. This allows a game master (GM) to control and track the flow of the game. Targets may be manually acquired should they fall outside the reach of players.

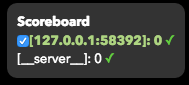

The dashboard shows the current score, ready status and IP address of the players. A green check mark indicates that the player is ready. The checkbox toggles the visibility of the player’s mesh.

Server-Client Interaction

Our system does not generate targets until all connected Hololenses have indicated that they are aligned and ready to start the game. This status can be tracked in real-time through the dashboard interface.
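The readiness gate can be pictured as a small piece of server-side state. The class and method names below are hypothetical and say nothing about our actual message format; the sketch only shows the rule that target generation waits for every connected client.

```python
class Lobby:
    """Tracks which connected clients have reported aligned-and-ready."""

    def __init__(self):
        self.ready = {}  # client id -> ready flag

    def connect(self, client_id):
        self.ready[client_id] = False

    def mark_ready(self, client_id):
        self.ready[client_id] = True

    def can_generate_targets(self):
        # Require at least one client, and every connected client ready.
        return bool(self.ready) and all(self.ready.values())
```

The same dictionary is what a dashboard would read to draw the per-player ready check marks.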

Future Work

- Aleks, Edward
  - Player scenario for smooth transition into gameplay.

- Aleks, Edward, Keunhong
  - End game determination and communication.
  - Real-time tracking of player locations.

- Hamid, Keunhong
  - Replay generation.
  - Glue mesh refinement to server.

- All
  - Improve polish and robustness of system.